TL;DR: Book a Meeting | Blue Sky Consulting

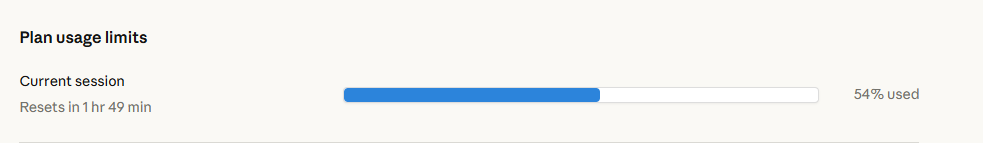

It was time to jump back on the vibe coding bandwagon. I fell off about two years ago, when I was between jobs. Back then, AI was relatively new, and I created an API that took a humble CSV file from the HomeWizard app and calculated what my electricity bill would look like with an hourly changing rate. For me, it was a great success. ChatGPT created an Azure Function that listened to HTTPS requests. It opened and read the CSV file and only stumbled on the actual algorithm (which I was able to fix in 5 minutes). The entire thing took about 15 minutes; on my own it would have taken a lot longer with all the cruft that had to be handled. I also wanted to create a website around that API, but AI failed to get that up and running in any meaningful time.
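For readers wondering what such a calculation looks like, here is a minimal sketch of pricing usage at an hourly changing rate. It is not the original API: the column names `time` and `usage_kwh`, the function name `hourly_cost`, and the sample prices are all assumptions made up for illustration, and the real HomeWizard export may look different.

```python
import csv
import io
from collections import defaultdict
from datetime import datetime


def hourly_cost(csv_text, price_per_kwh_by_hour):
    """Sum usage per clock hour and price each hour at its own rate."""
    usage_by_hour = defaultdict(float)
    for row in csv.DictReader(io.StringIO(csv_text)):
        # Assumed columns: 'time' (ISO timestamp) and 'usage_kwh'
        # (energy used in that interval). Both names are hypothetical.
        stamp = datetime.fromisoformat(row["time"])
        hour_start = stamp.replace(minute=0, second=0, microsecond=0)
        usage_by_hour[hour_start] += float(row["usage_kwh"])
    # Multiply each hour's usage by the price that applied in that hour.
    return sum(kwh * price_per_kwh_by_hour[hour] for hour, kwh in usage_by_hour.items())


# Made-up sample data: two half-hour readings in hour 0, one reading in hour 1.
sample = """time,usage_kwh
2024-01-01T00:00:00,0.5
2024-01-01T00:30:00,0.5
2024-01-01T01:00:00,2.0
"""
prices = {
    datetime(2024, 1, 1, 0): 0.25,
    datetime(2024, 1, 1, 1): 0.5,
}
total = hourly_cost(sample, prices)
```

Grouping by the start of each clock hour keeps the pricing lookup simple, whatever the interval between readings turns out to be.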

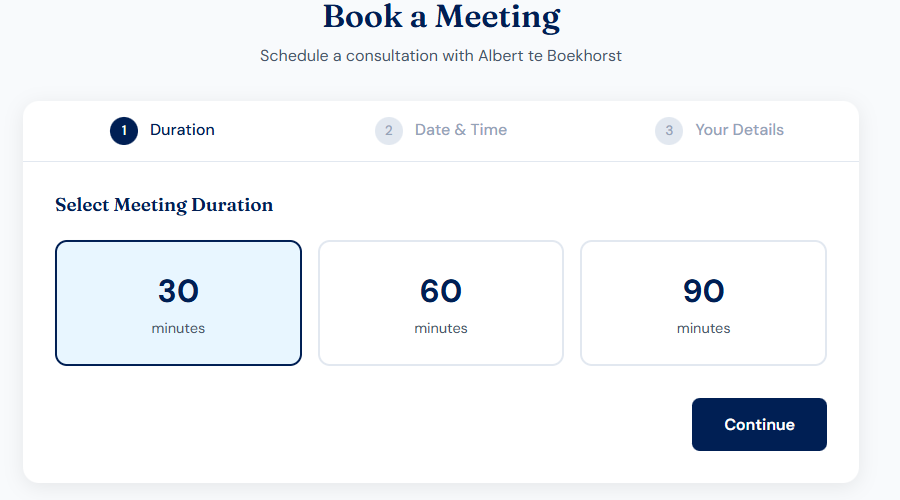

Fast forward two years to 2026. Claude Opus 4.5 was the new kid on the block. I asked it to create a website for my own consulting firm: Blue Sky Consulting. It went a bit overboard with some bold claims (perhaps it was trained a bit too much on American content) that were out of touch with reality; however, it was, and is, an acceptable site. I published it as is (minus those bold claims) without putting much effort into fixing some minor issues (the tiny home button is still cracking me up), and proceeded with some actual functionality: the Book a Meeting button.

A way to book a meeting, lunch, drinks, an “I love VAR” t-shirt giving ceremony, a re-connect, or whatever meeting option you like is something everyone should have on their professional site (and yes, please use it if you read this and haven’t spoken to me for a while: Book a Meeting | Blue Sky Consulting). It is actually connected to my Outlook through Microsoft Graph and sends emails from contact@blueskyconsult.nl to verify would-be bookers’ email addresses, which keeps the bot army prowling the internet from booking meetings (so far this seems to be working well enough). It shows you the open slots in my calendar to choose from. Once the email address is confirmed, it books a meeting with a Microsoft Teams link and sends out the invites.
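The open-slot part can be sketched roughly as follows. This is not the code Claude generated for the site; it assumes the busy intervals have already been fetched from the calendar, and the function name `open_slots` and the sample day are made up for illustration.

```python
from datetime import datetime, timedelta


def open_slots(day_start, day_end, busy, slot_length):
    """Return start times of slots in [day_start, day_end) that overlap no busy interval."""
    slots = []
    start = day_start
    while start + slot_length <= day_end:
        end = start + slot_length
        # Two intervals overlap when each one starts before the other ends.
        if not any(b_start < end and start < b_end for b_start, b_end in busy):
            slots.append(start)
        start = end
    return slots


# Hypothetical day with a single existing meeting from 09:30 to 10:30.
day = datetime(2026, 1, 15)
busy = [(day.replace(hour=9, minute=30), day.replace(hour=10, minute=30))]
free = open_slots(day.replace(hour=9), day.replace(hour=12), busy, timedelta(minutes=30))
```

With this sample, the 09:30 and 10:00 slots are blocked and the remaining half-hour slots between 09:00 and 12:00 stay bookable.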

The only bug was with date-time offsets between my server and Microsoft Graph; it took a few debug rounds to fix, but overall it did a most acceptable job. I had a similar experience with a tool Claude created for me to sync my customers’ Outlook calendars with my Blue Sky Consulting calendar.
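A common way to avoid this class of bug is to convert every timestamp to UTC before comparing or storing it. The sketch below shows that idea only; it is not the actual fix, and the UTC+1 server offset and the helper name `to_utc` are assumptions for illustration.

```python
from datetime import datetime, timedelta, timezone


def to_utc(naive, utc_offset):
    """Interpret a naive wall-clock time at the given UTC offset and return it in UTC."""
    return naive.replace(tzinfo=timezone(utc_offset)).astimezone(timezone.utc)


# Assumption: the server clock runs at UTC+1 and the calendar API reports times in UTC.
server_slot = to_utc(datetime(2026, 1, 15, 10, 0), timedelta(hours=1))
api_slot = to_utc(datetime(2026, 1, 15, 9, 0), timedelta(0))

# Once both are timezone-aware UTC values, they compare as the same moment.
same_moment = server_slot == api_slot
```

Fixed offsets ignore daylight saving time, so a real implementation would more likely use named time zones (for example through Python's `zoneinfo` module) instead of a hard-coded offset.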

My biggest takeaway is that it would be really beneficial to set up automated testing that feeds its results back to Claude. Next up is fixing an old and broken-down app: mappingtrust.com. That should be interesting, as it has enough data in it to create some genuinely strong test cases.
